Objective

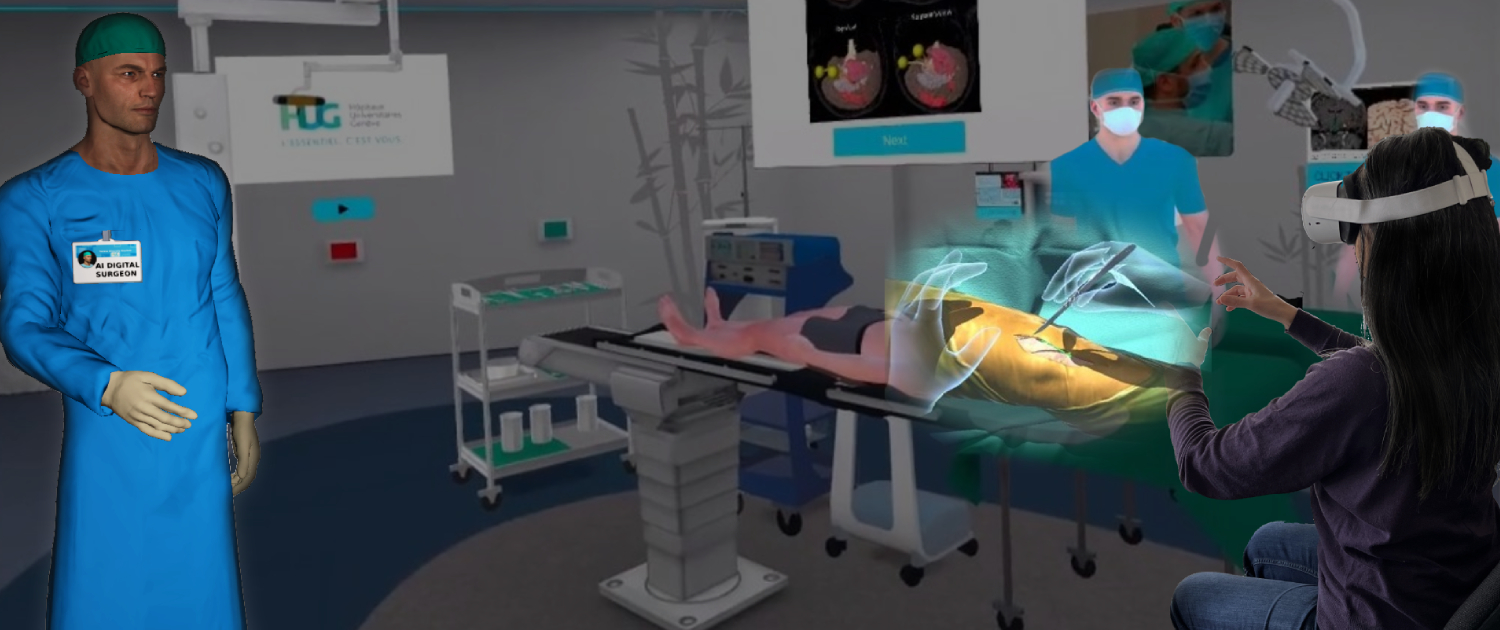

This project aims to design and model a 3D intelligent digital surgeon to guide trainees in virtual surgery. The digital surgeon will recognize and analyze the trainee’s hand and arm gestures and provide personalized assessment, real-time feedback, instructions, and recommendations. The proposed system will adopt a distributed software architecture that takes advantage of edge-cloud resources and 5G networking to enable ultra-low-latency, untethered VR.

The IDS motion-capture data, which contain the surgeons’ know-how gestures, are provided by the University Hospital of Geneva (HUG) and then visualized by MIRALab to produce a digital representation of these gestures.

UNIGE will derive a deep learning model from recordings of skilled surgeons’ gestures to support the digital surgeon’s feedback decision engine. UNIGE will also implement a distributed VR system architecture that uses edge computing and 5G networks.

ORamaVR will implement the deep learning model in their MAGE system. Furthermore, ORamaVR will embed methods for cutting and tearing physics-based deformable surfaces that advance the state of the art.

Partners

University of Geneva, University Hospital of Geneva, ORama S.A., MIRALab sarl.